K-means 알고리즘 구현하기

2019, Jan 05

K-means algorithm 구현하기¶

by JunPyo Park

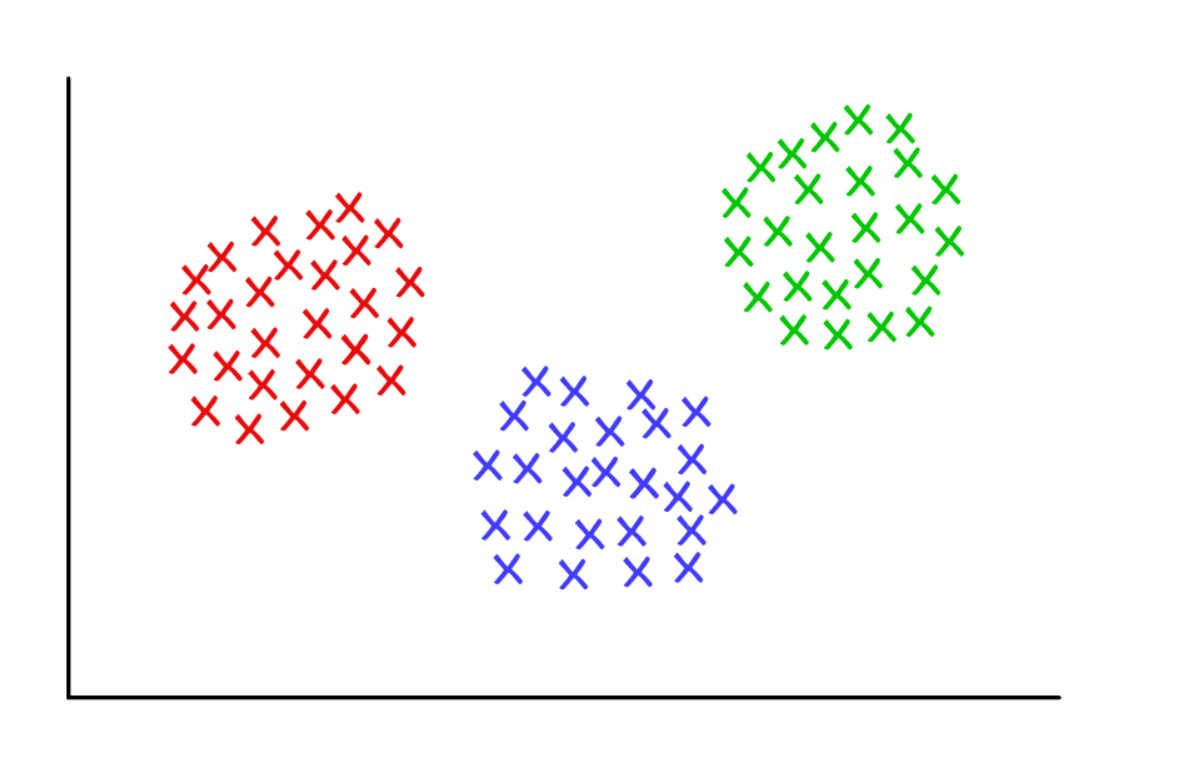

sklearn package 에 내장되어 있는 국민 데이터셋인 Iris 데이터셋을 사용하여 k-means 알고리즘을 구현해 보았습니다.

k=3 인 경우에 해당하는 코드를 먼저 작성하였고 그 다음 임의의 k 값에 대해 작동하도록 함수를 만들어 보았습니다.

함수 내부에서 Jagota index를 계산하여 k에 따른 Jagota index의 변화를 마지막에 출력하였습니다.

Jagota Index?¶

In [1]:

import plotly.plotly as py

import plotly.graph_objs as go

import numpy as np

import pandas as pd

from sklearn import datasets

from jupyterthemes import jtplot

import matplotlib.pyplot as plt

jtplot.style('grade3')

Load Data¶

In [2]:

iris = datasets.load_iris() # load iris dataset

In [3]:

X = iris.data[:, :2] # select two features

X.shape

Out[3]:

Data Visualization¶

In [4]:

trace = go.Scatter(

x = X[:,0],

y = X[:,1],

name = 'Data_Point',

mode = 'markers'

)

data = [trace]

fig = dict(data=data)

py.iplot(fig, filename='basic-scatter')

Out[4]:

Choose Initial Random Points (For the Case k=3)¶

In [5]:

# The number of clusters and data

k = 3

m = X.shape[0]

# ramdomly initialize mean points

mu = X[np.random.randint(0,m,k),:]

pre_mu = mu.copy()

print("Initial 3 Random Point")

print(mu)

In [6]:

trace1 = go.Scatter(

x = mu[:,0],

y = mu[:,1],

name = 'Initial_Random_Point',

mode = 'markers',

marker = dict(

size = 10,

color = 'rgba(255, 182, 193, .9)',

line = dict(

width = 2,

)

)

)

data = [trace, trace1]

fig = dict(data=data)

py.iplot(fig, filename='add_initial_point')

Out[6]:

Running K-means Algorithm¶

In [7]:

y = np.empty([m,1])

tolerance = 1e-15 # Setting the tolerance(stopping criteria), I arbitrarily put this value

iter_num = 0

while True:

iter_num += 1

for i in range(m):

d0 = np.linalg.norm(X[i,:] - mu[0,:],2) # use 2-norm(Euclidean Distance) as a distance measure

d1 = np.linalg.norm(X[i,:] - mu[1,:],2)

d2 = np.linalg.norm(X[i,:] - mu[2,:],2)

y[i] = np.argmin([d0, d1, d2]) # Choose the closest cluster

err = 0

for i in range(k): # Calculate the error

mu[i,:] = np.mean(X[np.where(y == i)[0]], axis=0)

err += np.linalg.norm(pre_mu[i,:] - mu[i,:],2)

pre_mu = mu.copy()

if err < tolerance:

print("Iteration:", iter_num) # if stopping criteria is satisfied, break the loop and print iteration number

break

if iter_num > 9999:

break

In [8]:

color_list = ['rgba(0, 230, 138, .9)','rgba(255, 102, 102, .9)', 'rgba(51, 133, 255, .9)' ]

data = []

for i in np.arange(3):

trace = go.Scatter(

x = X[np.where(y==i)[0]][:,0],

y = X[np.where(y==i)[0]][:,1],

name = 'Cluster_' + str(i+1),

mode = 'markers',

marker = dict(

size = 10,

color = color_list[i],

)

)

data.append(trace)

fig = dict(data=data)

py.iplot(fig, filename='clustering_result')

Out[8]:

Making the function for arbitrary k¶

In [126]:

def k_means(X,k):

m = X.shape[0]

# ramdomly initialize mean points

mu = X[np.random.randint(0,m,k),:]

pre_mu = mu.copy()

y = np.empty([m,1])

tolerance = 1e-15 # Setting the tolerance(stopping criteria), I arbitrarily put this value

iter_num = 0

while True:

iter_num += 1

for i in range(m):

distance = []

for j in range(k):

distance.append(np.linalg.norm(X[i,:] - mu[j,:],2)) # use 2-norm(Euclidean Distance) as a distance measure

y[i] = np.argmin(distance) # Choose the closest cluster

err = 0

for i in range(k): # Calculate the error

mu[i,:] = np.mean(X[np.where(y == i)[0]], axis=0)

err += np.linalg.norm(pre_mu[i,:] - mu[i,:],2)

pre_mu = mu.copy()

if err < tolerance:

print("Iteration:", iter_num) # if stopping criteria is satisfied, break the loop and print iteration number

data = []

for i in np.arange(k):

trace = go.Scatter(

x = X[np.where(y==i)[0]][:,0],

y = X[np.where(y==i)[0]][:,1],

name = 'Cluster_' + str(i+1),

mode = 'markers',

marker = dict(

size = 10,

)

)

data.append(trace)

jagota_measure=0

y = pd.Series(y[:,0])

for i in range(m): # calculating Jagota Measure(Q measure)

num = len(y.where(y==y[i]).dropna())

jagota_measure += np.linalg.norm(X[i,:] - mu[int(y[i]),:],2) / num

fig = dict(data=data)

return(fig,jagota_measure)

break

if iter_num > 9999:

break

Result for k=3¶

In [132]:

py.iplot(k_means(X,3)[0])

Out[132]:

Jagota Measure for k=3

In [130]:

k_means(X,3)[1]

Out[130]:

Result for k=5¶

In [98]:

py.iplot(k_means(X,5))

Out[98]:

Jagota Measure for k=5

In [168]:

k_means(X,5)[1]

Out[168]:

Plot Q measure for different k¶

In [161]:

x = np.arange(6)+2

y = []

for k in x:

y.append(k_means(X,k)[1])

In [167]:

plt.figure(figsize=(14,7))

plt.title('Q measure for k-means clustering')

plt.xlabel('k')

plt.ylabel('Jagota Measure(=Q)')

plt.plot(x,y)

plt.show()