Python Modules - Trying Out PyOD

2020, Nov 09

Trying Out PyOD

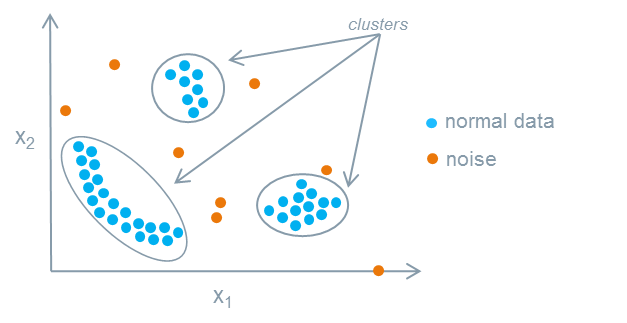

PyOD is a comprehensive and scalable Python toolkit for detecting outlying objects in multivariate data. This exciting yet challenging field is commonly referred to as Outlier Detection or Anomaly Detection.

In short, it is a module built for detecting outliers and anomalies.

As of 2020-11-10, this code raised an error with Numpy==1.19.4 and ran correctly with Numpy==1.19.3.
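
If you would rather have the notebook stop with a clear message than fail halfway through, a small version guard can help. This is only a sketch: the helper name check_numpy_version is hypothetical, and the single "known bad" version simply comes from the note above, not from PyOD or NumPy.

```python
import warnings

import numpy as np

# Hypothetical list based on the note above: 1.19.4 failed, 1.19.3 worked (2020-11-10).
KNOWN_BAD_NUMPY = {"1.19.4"}


def check_numpy_version(version: str = np.__version__) -> bool:
    """Return True if `version` is not on the known-bad list; otherwise warn and return False."""
    if version in KNOWN_BAD_NUMPY:
        warnings.warn(
            f"NumPy {version} was reported to fail with this notebook; "
            "consider installing numpy==1.19.3 instead."
        )
        return False
    return True


# Check the NumPy version that is actually installed.
check_numpy_version()
```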

Importing the modules

from pyod.utils.example import visualize
from pyod.utils.data import evaluate_print, generate_data
from pyod.models.knn import KNN

contamination = 0.1  # fraction of outliers (10%)
n_train = 200  # number of training points
n_test = 100  # number of testing points

Generating the data

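# Generate sample data
# Recent PyOD versions return (X_train, X_test, y_train, y_test);
# older releases returned (X_train, y_train, X_test, y_test).
X_train, X_test, y_train, y_test = \
    generate_data(n_train=n_train,
                  n_test=n_test,
                  n_features=2,
                  contamination=contamination,
                  random_state=42)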
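
In the generated labels, 0 marks an inlier and 1 marks an outlier, and with contamination=0.1 about 10% of each split should be outliers (20 of the 200 training points, 10 of the 100 test points). To make that layout concrete, the NumPy sketch below builds a similar toy dataset by hand. It rests on my own assumptions (a tight Gaussian blob of inliers, outliers scattered uniformly over a wider box, and the hypothetical helper make_toy_data); it is not PyOD's actual implementation of generate_data.

```python
import numpy as np


def make_toy_data(n: int, contamination: float, n_features: int = 2,
                  offset: float = 10.0, seed: int = 42):
    """Hypothetical helper: Gaussian inliers near the origin, uniform outliers.

    Returns X with shape (n, n_features) and y with shape (n,),
    where y == 0 marks inliers and y == 1 marks outliers.
    """
    rng = np.random.default_rng(seed)
    n_outliers = int(n * contamination)
    n_inliers = n - n_outliers
    # Inliers: a tight Gaussian blob centred at the origin
    inliers = 0.5 * rng.standard_normal((n_inliers, n_features))
    # Outliers: spread uniformly over the much wider box [-offset, offset]
    outliers = rng.uniform(-offset, offset, size=(n_outliers, n_features))
    X = np.vstack([inliers, outliers])
    y = np.concatenate([np.zeros(n_inliers, dtype=int),
                        np.ones(n_outliers, dtype=int)])
    return X, y


X_toy, y_toy = make_toy_data(200, 0.1)
print(X_toy.shape, y_toy.shape, y_toy.sum())  # (200, 2) (200,) 20
```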

X_train.shape, y_train.shape

X_test.shape, y_test.shape

Fitting the model

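clf_name = 'KNN'
# k-nearest-neighbour detector with default settings
# (n_neighbors=5, contamination=0.1, which matches the generated data)
clf = KNN()
clf.fit(X_train)  # unsupervised: only X_train is used, no labels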
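
The KNN detector scores each point by how far it is from its nearest neighbours. With the default method='largest', the score is the distance to the k-th nearest neighbour, so a larger score means the point is more isolated. The brute-force NumPy sketch below reproduces that idea for illustration. The helper name knn_outlier_scores is hypothetical; PyOD itself relies on a scikit-learn neighbour search rather than a full distance matrix. As far as I know, PyOD also scores training points without counting each point as its own neighbour, and the sketch does the same.

```python
import numpy as np


def knn_outlier_scores(X_train, X_query=None, k=5):
    """Hypothetical helper: distance to the k-th nearest training neighbour.

    If X_query is None, the training points themselves are scored and each
    point's zero distance to itself is ignored.
    """
    X_train = np.asarray(X_train, dtype=float)
    same = X_query is None
    Q = X_train if same else np.asarray(X_query, dtype=float)
    # Pairwise Euclidean distances, shape (n_query, n_train)
    d = np.linalg.norm(Q[:, None, :] - X_train[None, :, :], axis=-1)
    if same:
        np.fill_diagonal(d, np.inf)  # a point is not its own neighbour
    d.sort(axis=1)
    return d[:, k - 1]  # k-th smallest distance ('largest' method)


# Four points on a unit square plus one far-away point
X = np.array([[0.0, 0.0], [0.0, 1.0], [1.0, 0.0], [1.0, 1.0], [10.0, 10.0]])
scores = knn_outlier_scores(X, k=2)
print(scores)  # the far-away point gets by far the largest score
```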

# Predicted labels on the training data (0 = inlier, 1 = outlier)
clf.labels_

Evaluation

# Binary labels and raw outlier scores for the training data
y_train_pred = clf.labels_
y_train_scores = clf.decision_scores_  # higher score = more outlying

# Binary labels and raw outlier scores for the unseen test data
y_test_pred = clf.predict(X_test)
y_test_scores = clf.decision_function(X_test)
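
The binary labels come from the scores. As I understand PyOD's base detector, fit sets threshold_ to the (1 - contamination) percentile of the training scores, and labels_ marks every training point whose score is strictly above it as 1. predict then applies that same training threshold to decision_function on new data. A minimal NumPy sketch of the rule, using the hypothetical helpers fit_threshold and apply_threshold and made-up scores:

```python
import numpy as np


def fit_threshold(train_scores, contamination=0.1):
    """Score cut-off so that roughly `contamination` of training points lie above it."""
    return np.percentile(train_scores, 100 * (1 - contamination))


def apply_threshold(scores, threshold):
    """Label 1 (outlier) if the score is strictly above the threshold, else 0 (inlier)."""
    return (np.asarray(scores) > threshold).astype(int)


train_scores = np.arange(1.0, 11.0)  # toy training scores 1, 2, ..., 10
threshold = fit_threshold(train_scores, contamination=0.1)
train_labels = apply_threshold(train_scores, threshold)  # like clf.labels_
test_labels = apply_threshold([0.5, 9.5, 12.0], threshold)  # like clf.predict
print(threshold, train_labels.sum(), test_labels)
```

Because the threshold is fixed at fit time, the share of test points flagged as outliers is not guaranteed to be exactly the contamination rate.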

print("On Training Data:")
evaluate_print(clf_name, y_train, y_train_scores)
print("\nOn Test Data:")
evaluate_print(clf_name, y_test, y_test_scores)
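
evaluate_print reports two numbers per detector: ROC AUC and precision @ rank n, where n is the number of true outliers in the labels. The sketch below computes both from scratch to show what they measure. It is an approximation under my own assumptions (hypothetical helper names, top-n chosen by sorting, ties in the AUC counted as one half); PyOD's own implementation, which builds on scikit-learn's roc_auc_score, may treat ties slightly differently.

```python
import numpy as np


def roc_auc(y_true, scores):
    """Probability that a random outlier scores higher than a random inlier (ties count 0.5)."""
    y_true = np.asarray(y_true)
    scores = np.asarray(scores, dtype=float)
    pos = scores[y_true == 1]
    neg = scores[y_true == 0]
    # Compare every outlier score with every inlier score
    diff = pos[:, None] - neg[None, :]
    return float(((diff > 0) + 0.5 * (diff == 0)).mean())


def precision_at_n(y_true, scores):
    """Share of true outliers among the n highest scores, n = number of true outliers."""
    y_true = np.asarray(y_true)
    n = int(y_true.sum())
    top_n = np.argsort(scores)[::-1][:n]
    return float(y_true[top_n].mean())


y = np.array([0, 0, 0, 0, 1, 1])
s = np.array([0.1, 0.2, 0.3, 0.9, 0.8, 0.95])
print(round(roc_auc(y, s), 4), round(precision_at_n(y, s), 4))  # 0.875 0.5
```

Here one inlier (score 0.9) outranks one outlier (score 0.8), which costs 1 of the 8 outlier/inlier pairs in ROC AUC but half of the top-2 slots in precision @ rank n.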

Visualization

# visualize the results
visualize(clf_name, X_train, y_train, X_test, y_test, y_train_pred,
          y_test_pred, show_figure=True, save_figure=True)